Identity Verification using Artificial Intelligence

The Challenge

For most businesses, it is important to identify their clients and verify those identities (a process known as KYC checks) in order to mitigate the risks these clients may pose to the business.

KYC (know your customer) checks are a common process in most businesses. Their purpose is to ensure that a business knows who its customers are, what type of activity to expect from each customer, and what type of risk each one brings to the business. Such checks are important for the sustainability of the business. They are, however, long and tiresome, and businesses usually need a dedicated team to perform the identification and verification process.

With modern AI technology, most of this work can now be automated. An AI system can complete this time-consuming process in a matter of a few minutes.

Let’s see how an AI-based identification and verification system can help solve this problem.

The Solution

We built an identity verification and liveness detection system using Machine Learning and Computer Vision. The AI system has two parts: identity verification and liveness detection. Identity verification checks the authenticity and validity of the ID and of the person presenting it. Liveness detection checks that the person is who they claim to be and not an impersonator. The AI system was deployed as a microservice for our client, whose web and mobile applications make API calls to it for KYC checks.

With our AI-based system, this is how the KYC checks have been automated.

STEP 1: Identity Verification

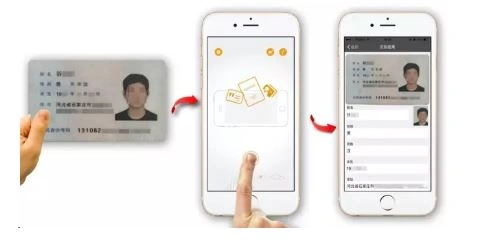

The process begins with the customer taking a picture of their ID card using their phone camera. The ID card carries various details about the customer in the form of text. This information is needed to verify whether the ID card is valid.

Next, we need to convert the image into computer-readable text so that verification can be performed. We use Optical Character Recognition (OCR) to extract the useful information from the ID card. OCR is the process of extracting text from images such as scanned documents or other photos that contain written text. The system has achieved an accuracy of 95% for this step.

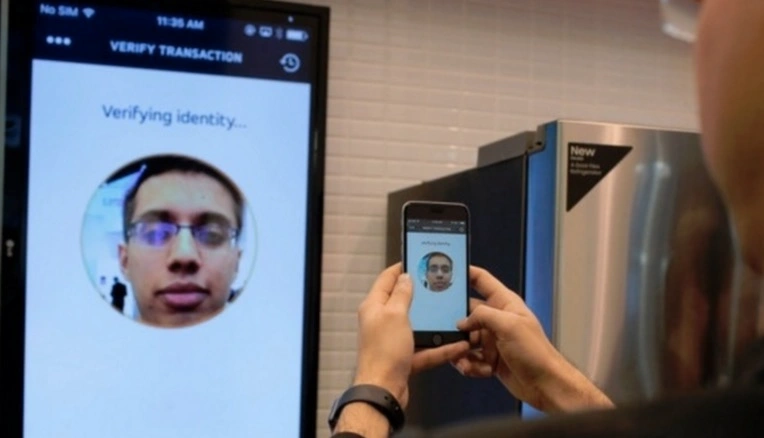

Next, the customer takes a selfie with their phone. A convolutional neural network is used for facial recognition: we verify that the face in the selfie matches the face in the photo extracted from the ID.
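The comparison between the two faces can be illustrated with a small sketch. It assumes, hypothetically, that the network maps each face to a numeric embedding vector and that two faces are compared by cosine similarity; the function names and the `0.8` threshold are illustrative assumptions, not details of the deployed system.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length, non-zero vectors."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("embeddings must be non-zero")
    return dot / (norm_a * norm_b)


def faces_match(id_embedding, selfie_embedding, threshold=0.8):
    """Return True when the two embeddings are similar enough.

    The threshold is a hypothetical value; in practice it would be
    tuned on validation data to balance false accepts and rejects.
    """
    return cosine_similarity(id_embedding, selfie_embedding) >= threshold
```

A higher threshold makes the check stricter: fewer impersonators pass, but more genuine customers may be asked to retake their selfie.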

STEP 2: Liveness Detection

The final step is to detect the liveness of the customer. In this step, the customer records a 2-3 second video. A face is extracted from the video using a face extraction CV model, and the video is processed further only if a valid face has been found. Next, the system performs preprocessing operations on the video and removes redundant frames to reduce the processing time. The AI system then verifies that the video was indeed shot in a live environment. The purpose of liveness detection is to make sure that the person in the first step is the same person who is doing the KYC checks, thereby guarding against impersonation. We trained the liveness detection system on a large-scale, self-collected dataset of 20,000 videos. The output of the liveness detection model is the probability that the video was shot in a live environment.
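As a rough illustration of the redundant-frame removal mentioned above, the sketch below drops any frame that is nearly identical to the last frame kept. It assumes frames are small grayscale images stored as nested lists of pixel values; the function names and the `min_diff` threshold are hypothetical, and the real preprocessing may work quite differently.

```python
def mean_abs_diff(frame_a, frame_b):
    """Mean absolute pixel difference between two equally sized frames."""
    count = sum(len(row) for row in frame_a)
    if count == 0:
        raise ValueError("frames must not be empty")
    total = sum(
        abs(p - q)
        for row_a, row_b in zip(frame_a, frame_b)
        for p, q in zip(row_a, row_b)
    )
    return total / count


def drop_redundant_frames(frames, min_diff=5.0):
    """Keep a frame only if it differs enough from the last kept frame.

    min_diff is a hypothetical threshold on the mean absolute
    pixel difference (pixel values assumed to be in 0..255).
    """
    kept = []
    for frame in frames:
        if not kept or mean_abs_diff(kept[-1], frame) >= min_diff:
            kept.append(frame)
    return kept
```

Comparing against the last kept frame, rather than the immediately preceding one, prevents slow gradual changes from being discarded entirely.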

The Technical Bit

Check digits from the MRZ (machine-readable zone) data are validated to establish the authenticity of the ID. Once the ID has been verified as valid, the next step is to match the picture on the ID card to the selfie. This is done using a deep learning model (an AI technique loosely inspired by the workings of the human brain) trained for facial recognition. A deep learning model generally performs better the more representative data it is trained on.
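The check-digit validation can be sketched as follows, assuming the ID follows the ICAO Doc 9303 MRZ convention: each character is given a value (digits keep their value, `A`-`Z` map to 10-35, the filler `<` counts as 0), the values are multiplied by the repeating weights 7, 3, 1, and the sum modulo 10 is the check digit. The function names are illustrative and the field values in the usage note are samples only.

```python
def mrz_char_value(ch):
    """Numeric value of one MRZ character."""
    if "0" <= ch <= "9":
        return int(ch)
    if "A" <= ch <= "Z":
        return ord(ch) - ord("A") + 10
    if ch == "<":
        return 0  # filler character
    raise ValueError(f"invalid MRZ character: {ch!r}")


def mrz_check_digit(field):
    """Weighted sum with repeating weights 7, 3, 1, taken modulo 10."""
    weights = (7, 3, 1)
    total = sum(
        mrz_char_value(ch) * weights[i % 3] for i, ch in enumerate(field)
    )
    return total % 10


def mrz_field_is_valid(field, check_char):
    """True if check_char is the correct check digit for field."""
    if len(check_char) != 1 or check_char not in "0123456789":
        return False
    return mrz_check_digit(field) == int(check_char)
```

For example, `mrz_field_is_valid("L898902C3", "6")` returns `True`, while a misread digit such as `"7"` fails the check, which is how OCR errors and tampered fields can be flagged before the face match is attempted.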

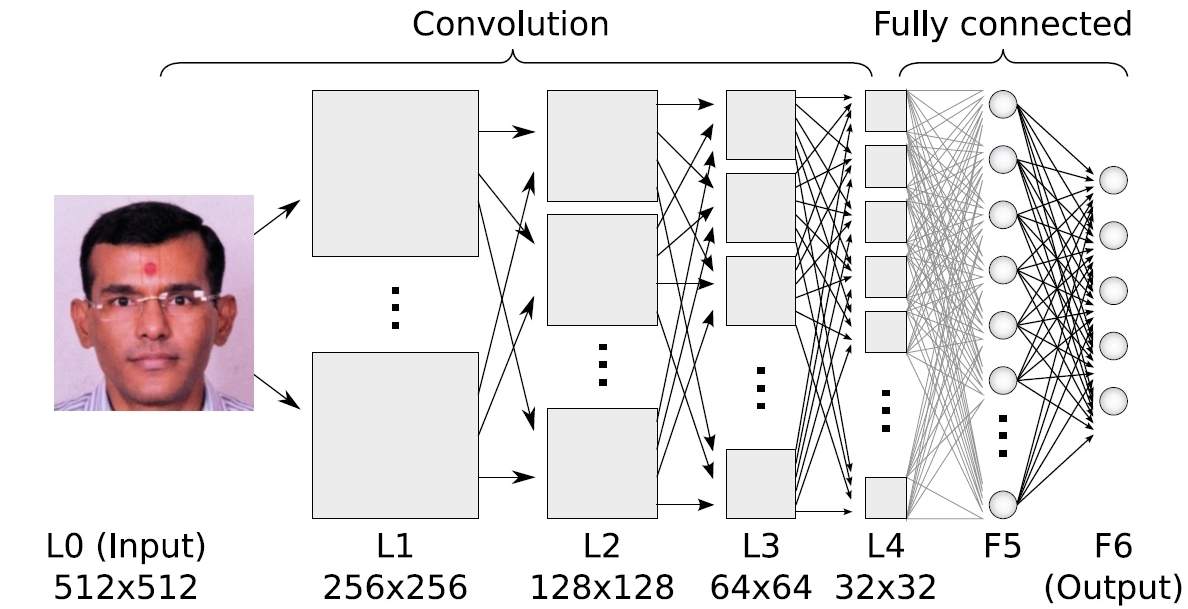

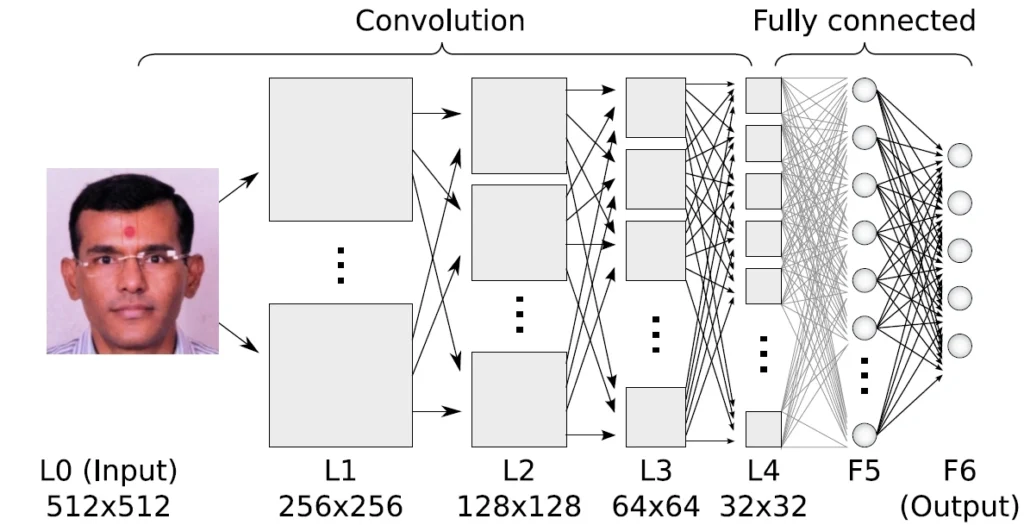

Our dataset consists of thousands of images, which exposes the system to diverse examples and improves its accuracy. The model outputs the probability that the two photos (the one on the ID card and the selfie) show the same person. Here is what a deep learning model for face recognition looks like.

We take advantage of Convolutional Neural Networks (CNNs) to extract features from our dataset. CNNs are deep neural networks used primarily for image classification, clustering of similar images, and object detection in different scenes. They are major contributors to the field of computer vision (CV). Each layer in a CNN extracts increasingly complex information about the image it has been given as input. This allows CNNs to extract a variety of useful features, which are used in this identification and verification process. With the help of very deep convolutional neural networks, we obtain both low-level and high-level features, which are then used to build an efficient system for facial recognition and liveness detection.
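To make the idea of feature extraction concrete, here is a minimal sketch of the operation a single convolutional layer applies: a small kernel slides over the image and responds strongly wherever its pattern appears. This is a toy, pure-Python illustration with a hand-picked kernel; a real CNN learns its kernels during training and would be built with a deep learning framework.

```python
def conv2d(image, kernel):
    """'Valid' 2D convolution as used in CNN layers (no padding, stride 1)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh)
                for dj in range(kw)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(feature_map):
    """Keep positive responses and zero out the rest."""
    return [[max(0, v) for v in row] for row in feature_map]


# A dark region on the left and a bright region on the right.
image = [
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
]
# Hand-picked kernel that responds to dark-to-bright vertical edges.
kernel = [
    [-1, 1],
    [-1, 1],
]
edges = relu(conv2d(image, kernel))  # non-zero only at the edge column
```

Stacking many such layers is what lets the early layers pick up low-level features such as edges while the deeper layers combine them into high-level features such as facial structure.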

The Results

As you might have guessed by now, the whole process explained above, if not automated, costs businesses a lot of time and other resources. We have built an efficient AI-based identity verification system to save you this trouble and make your life easier. In a nutshell, you can:

- Integrate the system in your existing infrastructure and perform KYC checks efficiently.

- Use it as a standalone application to perform identification and verification operations for any other business requirement.